Introduction

Simultaneous Localization and Mapping (SLAM) is a technique used in robotics and computer vision to create maps of unknown environments and simultaneously determine the location of the robot or camera within those maps. SLAM is an important problem in robotics as it enables robots to operate autonomously in unknown or changing environments.

What is SLAM?

The goal of SLAM is to build a map of an environment while simultaneously estimating the pose (position and orientation) of a robot or camera within it. The map can be two-dimensional or three-dimensional, and the platform perceives the environment through sensors such as cameras, laser rangefinders, or sonar.

The SLAM problem is difficult because the two estimates depend on each other. The robot or camera needs to know its pose to place new observations correctly in the map, and it needs an accurate map to estimate its pose. This mutual dependence is often described as a “chicken-and-egg” problem.
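To make the coupling concrete, here is a minimal Python sketch, assuming a planar robot with pose (x, y, θ) and a sensor that reports a landmark's offset in the robot's own frame. The function name `landmark_in_world` and the numbers are hypothetical. Any error in the pose estimate is copied directly into the landmark's mapped position, which is why the pose and the map have to be estimated together.

```python
import math


def landmark_in_world(pose, observation):
    """Place a landmark in the map from the robot's pose and a relative observation.

    pose: (x, y, theta) of the robot in the map frame (a 2D robot is assumed).
    observation: (dx, dy) offset of the landmark in the robot's own frame.
    """
    x, y, theta = pose
    dx, dy = observation
    # Rotate the robot-frame offset into the map frame, then translate it.
    wx = x + math.cos(theta) * dx - math.sin(theta) * dy
    wy = y + math.sin(theta) * dx + math.cos(theta) * dy
    return (wx, wy)


obs = (2.0, 0.0)  # hypothetical landmark seen 2 m straight ahead

true_pose = (0.0, 0.0, 0.0)
estimated_pose = (0.3, 0.0, math.radians(5))  # pose estimate that has drifted

print(landmark_in_world(true_pose, obs))       # (2.0, 0.0)
print(landmark_in_world(estimated_pose, obs))  # shifted: the pose error leaks into the map
```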

What role does it play in Augmented Reality?

SLAM plays a crucial role in augmented reality (AR) applications. AR overlays virtual objects or information onto the real world, creating an immersive experience for the user. In AR, SLAM tracks the user’s position and orientation in the real world so that virtual objects stay aligned with the real-world environment.

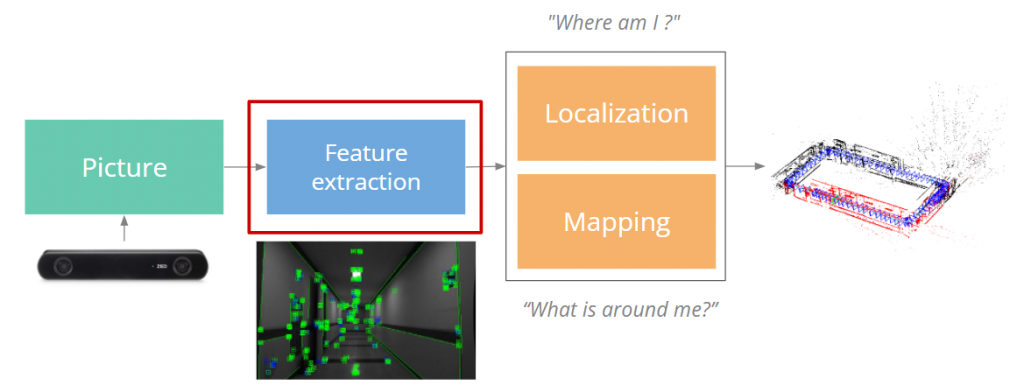

In AR, SLAM algorithms use the camera of a mobile device or a dedicated AR headset to capture images of the environment. The SLAM algorithm then analyzes the images to build a map of the environment and estimate the user’s position and orientation within the map. This information is used to accurately place virtual objects into the real world and to ensure that they maintain their position relative to the real-world environment.
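As a rough sketch of how the tracked pose is used, the Python below projects a virtual object anchored at a fixed world position into the camera image. It assumes a pinhole camera with OpenCV-style axes (x right, y down, z forward), a camera that rotates only about the vertical axis, and made-up intrinsics; the function name `project_anchor` is hypothetical, and in a real app the camera position and yaw would come from the SLAM tracker on every frame. Because the anchor is stored in world coordinates, its pixel position changes as the camera moves, which is what keeps the object visually fixed in place.

```python
import math


def project_anchor(anchor_world, cam_position, cam_yaw,
                   fx=500.0, fy=500.0, cx=320.0, cy=240.0):
    """Project a world-anchored virtual point into pixel coordinates.

    Illustrative assumptions: the camera looks along +z with x right and y down,
    rotates only about the vertical (y) axis, and fx, fy, cx, cy are placeholders.
    """
    # Offset from the camera to the anchor, expressed in the world frame.
    dx = anchor_world[0] - cam_position[0]
    dy = anchor_world[1] - cam_position[1]
    dz = anchor_world[2] - cam_position[2]
    # Rotate into the camera frame (inverse of the camera's yaw rotation).
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    X = c * dx - s * dz
    Y = dy
    Z = s * dx + c * dz
    if Z <= 0:
        return None  # anchor is behind the camera, so there is nothing to draw
    # Pinhole projection to pixel coordinates.
    return (fx * X / Z + cx, fy * Y / Z + cy)


anchor = (0.0, 0.0, 2.0)  # virtual object placed 2 m in front of the start pose
print(project_anchor(anchor, (0.0, 0.0, 0.0), 0.0))  # (320.0, 240.0): image center
print(project_anchor(anchor, (0.5, 0.0, 0.0), 0.0))  # (195.0, 240.0): moves left as the camera moves right
```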

SLAM is particularly important in AR because it enables a seamless and immersive experience for the user. Without accurate tracking of the user’s position and orientation, virtual objects in AR may appear to float in mid-air or move around in an erratic way, which can be disorienting and detract from the user’s experience. By using SLAM to accurately track the user’s position and orientation, AR applications can create a more realistic and immersive experience.

SLAM also enables AR applications to interact with the real-world environment. For example, an AR application might use the SLAM map to detect a surface such as a tabletop and then place a virtual object on top of the table or next to a chair. This interaction with the real world enhances the user’s experience and makes AR applications more useful and engaging.

Applications of SLAM

SLAM has numerous applications in robotics and computer vision. Some of these applications include:

- Autonomous vehicles: SLAM is used in self-driving cars to enable them to navigate autonomously in unknown environments.

- Robotics: SLAM is used in robotics for mapping and localization in various environments, such as warehouses, factories, and hospitals.

- Augmented Reality: SLAM is used in augmented reality applications to track the position and orientation of a camera or device in real time.

- Virtual Reality: SLAM is used in virtual reality applications to create 3D models of the environment and track the position and orientation of the user.

Challenges in SLAM

The SLAM problem is challenging due to several factors, including:

- Uncertainty: The sensors used for SLAM are often noisy, which introduces uncertainty in the measurements. This uncertainty must be accounted for in the SLAM algorithm.

- Computational Complexity: The SLAM problem requires processing large amounts of sensor data in real time, which can be computationally expensive.

- Sensor Fusion: SLAM often requires fusing data from multiple sensors, which can be challenging due to differences in sensor accuracy, frequency, and range.

- Mapping: Building an accurate map of the environment requires handling occlusions, loop closures, and the data association problem.
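Uncertainty and sensor fusion can be illustrated with the simplest possible case: two independent sensors measuring the same distance. The sketch below, assuming independent Gaussian noise with known variances, fuses the readings by inverse-variance weighting, which is the scalar form of a Kalman filter measurement update. The sensor readings are invented for illustration and the function name `fuse` is hypothetical.

```python
def fuse(measurements):
    """Fuse independent measurements of one quantity by inverse-variance weighting.

    measurements: list of (value, variance) pairs, assumed independent and Gaussian.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    # More precise sensors (smaller variance) receive larger weights.
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to a wall: a precise lidar and a noisy sonar.
lidar = (4.02, 0.01)  # metres, variance in m^2
sonar = (4.30, 0.09)
print(fuse([lidar, sonar]))
```

The fused estimate (about 4.048 m) lies close to the more precise lidar reading, and its variance (0.009 m²) is smaller than that of either sensor alone.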

Features of SLAM

- Map building: SLAM algorithms create a map of the environment using sensor data. The map can be 2D or 3D and may include features such as walls, obstacles, or objects.

- Pose estimation: SLAM algorithms estimate the position and orientation of the robot or camera within the map. This is known as pose estimation, and it is a critical component of SLAM.

- Sensor fusion: SLAM algorithms often use multiple sensors, such as cameras, laser range finders, or sonar sensors, to gather data about the environment. Sensor fusion involves combining data from multiple sensors to create a more accurate map and estimate the robot’s pose.

- Loop closure: Loop closure is the process of recognizing that the robot has returned to a previously mapped location. Because small errors accumulate as the robot moves (drift), detecting a loop closure lets the algorithm correct the map and pose estimates along the whole trajectory.

- Data association: Data association is the process of associating sensor data with features in the map. This is a challenging problem in SLAM, particularly when the environment is dynamic and features are moving or changing.

- Uncertainty modeling: SLAM algorithms must account for uncertainty in the sensor data and pose estimates. Uncertainty modeling involves estimating the probability distribution of the robot’s pose and the environment’s features.

- Real-time operation: Many applications, such as AR and autonomous navigation, require SLAM to run in real time, processing sensor data quickly and accurately enough to provide timely pose estimates and map updates.

- Scalability: SLAM algorithms must be scalable to handle large environments and long-term operations. This requires efficient data structures and algorithms that can handle large amounts of data and computations.

- Robustness: SLAM algorithms must be robust to challenging environmental conditions, such as poor lighting, occlusions, or sensor failures. This requires careful sensor selection and algorithms that tolerate noisy or incomplete data.
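Data association, one of the features listed above, can be sketched with a greedy nearest-neighbour matcher. This is a simplified illustration, not a production method: it assumes observations have already been transformed into the map frame and uses a plain Euclidean distance gate, whereas practical systems often gate on a Mahalanobis distance that accounts for uncertainty. The function name, landmark names, and gate value are hypothetical.

```python
import math


def associate(observations, landmarks, gate=0.5):
    """Greedy nearest-neighbour data association with a distance gate.

    observations: list of (x, y) points already expressed in the map frame.
    landmarks: dict mapping landmark id -> (x, y) position in the map.
    gate: maximum match distance (metres, assumed); unmatched observations
          are returned with None and could be added as new landmarks.
    Returns a list of (observation_index, landmark_id or None).
    """
    matches = []
    used = set()
    for i, (ox, oy) in enumerate(observations):
        best_id, best_d = None, gate
        for lid, (lx, ly) in landmarks.items():
            if lid in used:
                continue  # each landmark may explain at most one observation
            d = math.hypot(ox - lx, oy - ly)
            if d <= best_d:
                best_id, best_d = lid, d
        if best_id is not None:
            used.add(best_id)
        matches.append((i, best_id))
    return matches


landmarks = {"door": (2.0, 0.0), "pillar": (5.0, 3.0)}
observations = [(2.1, -0.1), (9.0, 9.0), (4.8, 3.2)]
print(associate(observations, landmarks))
# [(0, 'door'), (1, None), (2, 'pillar')]
```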

SLAM Algorithms

There are several SLAM algorithms, including:

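- Extended Kalman Filter (EKF): The EKF is a recursive Bayesian filter that jointly estimates the robot’s pose and the positions of map features, linearizing the motion and measurement models around the current estimate. EKF is efficient for small maps, but its cost grows quadratically with the number of map features, and its linearization errors make it poorly suited to highly non-linear problems.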

- Particle Filter (PF): The PF is a non-parametric Bayesian filter that represents the belief over the robot’s pose and the map with a set of weighted samples (particles). PF is suitable for highly non-linear problems but can suffer from particle degeneracy, where a few particles end up carrying almost all of the weight.

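- Graph-based SLAM: Graph-based SLAM formulates the SLAM problem as a graph, where the nodes represent robot poses (and, in some formulations, map features) and the edges represent constraints derived from sensor measurements. The most consistent configuration is then found by optimization, typically nonlinear least squares. Graph-based SLAM scales well to large environments and handles loop closures naturally as additional edges, while data association is usually handled by a separate front end.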
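The EKF idea of a joint state can be sketched on a toy 1D problem: a robot and a single landmark on a line, with the state [x, l] and one shared covariance matrix. This is an illustration under loudly simplified assumptions: the motion model (x' = x + u) and range model (z = l − x) are linear, so linearization is exact and the filter reduces to an ordinary Kalman filter, and it does not exhibit the linearization errors mentioned above. The noise values `q` and `r`, the scenario, and the function names are hypothetical.

```python
def predict(state, P, u, q):
    """Motion step: the robot moves by odometry u; the landmark is assumed static."""
    x, l = state
    # Motion model x' = x + u, so F is the identity and only the robot's
    # variance grows, by the motion-noise variance q.
    P = [[P[0][0] + q, P[0][1]],
         [P[1][0], P[1][1]]]
    return [x + u, l], P


def update(state, P, z, r):
    """Measurement step: z is a range reading of the landmark, modelled as l - x."""
    x, l = state
    H = [-1.0, 1.0]  # Jacobian of h(x, l) = l - x
    y = z - (l - x)  # innovation: measured minus predicted range
    PHt = [P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1]]
    S = H[0] * PHt[0] + H[1] * PHt[1] + r  # innovation variance
    K = [PHt[0] / S, PHt[1] / S]           # Kalman gain
    HP = [H[0] * P[0][0] + H[1] * P[1][0],
          H[0] * P[0][1] + H[1] * P[1][1]]
    # Covariance update P = (I - K H) P; off-diagonal entries record how the
    # robot and landmark errors are correlated.
    P = [[P[0][0] - K[0] * HP[0], P[0][1] - K[0] * HP[1]],
         [P[1][0] - K[1] * HP[0], P[1][1] - K[1] * HP[1]]]
    return [x + K[0] * y, l + K[1] * y], P


true_landmark = 10.0
state = [0.0, 0.0]              # robot starts at 0; landmark guess is arbitrary
P = [[0.0, 0.0], [0.0, 100.0]]  # robot position known, landmark very uncertain
true_x = 0.0
for _ in range(5):
    true_x += 1.0
    state, P = predict(state, P, u=1.0, q=0.01)
    # Noise-free reading, for a reproducible illustration.
    state, P = update(state, P, z=true_landmark - true_x, r=0.25)

print(state, P[1][1])  # landmark estimate near 10, with much smaller variance
```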
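Graph-based SLAM can be illustrated with a toy 1D pose graph. In the sketch below, assuming poses on a line, nodes are robot positions and each edge says "x_j − x_i should equal this measured offset"; the graph is solved as a weighted linear least-squares problem. Odometry drifts by 0.1 m over a loop that really returns to the start, and one loop-closure edge spreads that error over the whole trajectory. The function names and numbers are hypothetical; real back ends work with 2D or 3D poses, whose rotations make the problem nonlinear, and rely on iterative sparse solvers (for example in libraries such as GTSAM or g2o).

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph(num_poses, edges, anchor_weight=1e6):
    """Least-squares 1D pose graph.

    edges: list of (i, j, measured_offset, weight) meaning x_j - x_i ~= measured_offset.
    Pose 0 is softly anchored at 0 so that the solution is unique.
    """
    H = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    H[0][0] += anchor_weight  # prior: x_0 ~= 0
    for i, j, z, w in edges:
        # Residual x_j - x_i - z has derivative -1 w.r.t. x_i and +1 w.r.t. x_j;
        # accumulate its contribution to the normal equations H x = b.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    return solve(H, b)


odometry = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, -1.9, 1.0)]
loop_closure = [(0, 3, 0.0, 1.0)]  # robot recognises pose 3 as its starting place

print(optimize_pose_graph(4, odometry))                 # drifted: last pose near 0.1
print(optimize_pose_graph(4, odometry + loop_closure))  # last pose pulled to about 0.025
```

With equal weights, the 0.1 m discrepancy is shared evenly by the four edges of the loop, so each odometry step absorbs 0.025 m of correction.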

Conclusion

SLAM is a key problem in robotics and computer vision because it lets robots and cameras operate autonomously in unknown or changing environments. Its uses include autonomous vehicles, robotics, augmented reality, and virtual reality. The problem is nevertheless hard to solve because of sensor uncertainty, computational complexity, sensor fusion, and the difficulties of mapping. Several families of SLAM algorithms, including EKF, PF, and graph-based SLAM, have been developed to address these issues.