Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally developed by Google, it is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes is used to manage containerized workloads and services. A container packages a software program together with its dependencies into a lightweight, portable unit that runs the same way in different environments. Kubernetes manages and orchestrates these containers, making it easier to deploy, scale, and update applications.

Some of the key features of Kubernetes include:

- Container orchestration: Kubernetes automates the deployment, scaling, and management of containerized applications.

- Service discovery and load balancing: Kubernetes gives each Service a stable DNS name and IP address and distributes incoming traffic across the multiple instances (Pods) behind it.

- Automatic failover: If a container crashes, Kubernetes restarts it; if a node fails, Kubernetes creates replacement Pods on healthy nodes so the application keeps running.

- Resource allocation and scheduling: Kubernetes allocates resources (such as CPU and memory) to containers according to their requests and limits, and schedules them onto nodes that have enough available capacity.

- Self-healing: Kubernetes monitors the health of containers (for example, with liveness probes) and automatically restarts or replaces containers that fail their health checks.
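
Automatic failover and self-healing both follow the same pattern: a controller repeatedly compares the desired state (for example, "three healthy replicas") with what is actually running, and acts to close the gap. The toy Python model below sketches that control loop under simplifying assumptions. It is not Kubernetes code; the names `Container`, `ReplicaController`, and `reconcile` are hypothetical, and real controllers observe the cluster through the API server rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Hypothetical stand-in for a running container."""
    name: str
    healthy: bool = True


@dataclass
class ReplicaController:
    """Toy controller that keeps `desired` healthy replicas running."""
    app: str
    desired: int
    running: list = field(default_factory=list)
    next_id: int = 1

    def reconcile(self) -> list:
        """Run one pass of the control loop and return the actions taken."""
        actions = []
        # Self-healing: remove containers that failed their health check.
        for c in [c for c in self.running if not c.healthy]:
            self.running.remove(c)
            actions.append(f"removed unhealthy {c.name}")
        # Start replacements until the desired count is reached.
        while len(self.running) < self.desired:
            c = Container(f"{self.app}-{self.next_id}")
            self.next_id += 1
            self.running.append(c)
            actions.append(f"started {c.name}")
        # Stop surplus replicas if the desired count was lowered.
        while len(self.running) > self.desired:
            c = self.running.pop()
            actions.append(f"stopped {c.name}")
        return actions


ctrl = ReplicaController("web", desired=3)
print(ctrl.reconcile())  # ['started web-1', 'started web-2', 'started web-3']
ctrl.running[1].healthy = False  # simulate web-2 crashing
print(ctrl.reconcile())  # ['removed unhealthy web-2', 'started web-4']
```

Because each pass only looks at the current gap between desired and observed state, the same loop handles crashes, manual deletions, and changes to the desired replica count.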

Kubernetes Architecture:

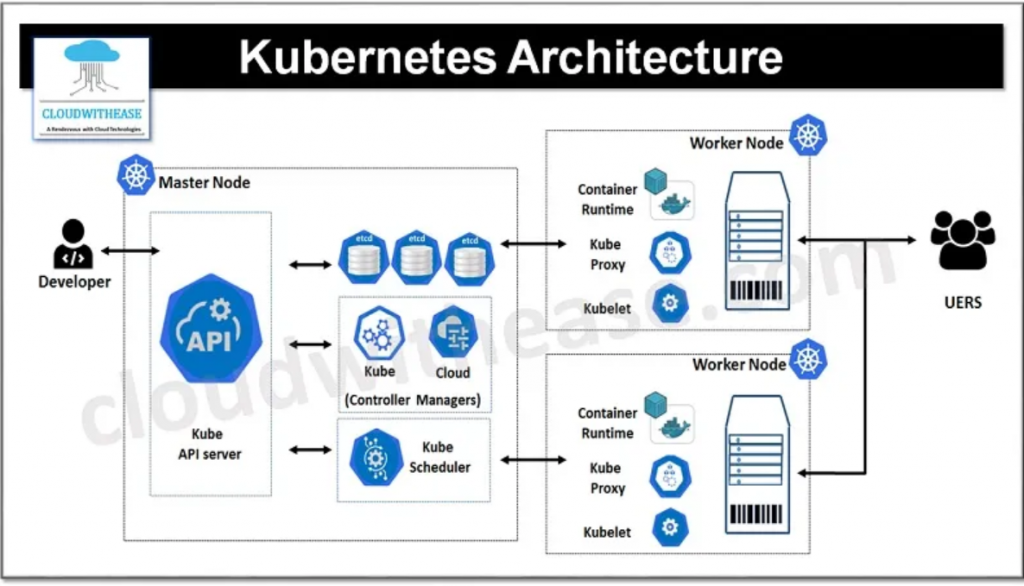

Kubernetes has a distributed architecture that consists of several components that work together to manage containerized applications. Here is a high-level overview of the Kubernetes architecture:

- Control plane (historically called the master node): The control plane is the brain of the Kubernetes cluster and manages the overall state of the system. It includes several components:

  - API Server: The API server (kube-apiserver) exposes the Kubernetes API. Users, tools such as kubectl, and the other cluster components all read and change the cluster's state through it.

  - etcd: etcd is a consistent, distributed key-value store that holds the state of the cluster.

  - Controller Manager: The Controller Manager runs the controllers that continuously drive the cluster's actual state toward its desired state, such as the ReplicaSet controller and the Deployment controller.

  - Scheduler: The Scheduler assigns newly created Pods to nodes in the cluster, based on their resource requirements and other constraints.

- Worker nodes: The worker nodes are the machines where containerized applications run. They include several components:

  - Kubelet: The kubelet is an agent that runs on each node. It communicates with the API server and makes sure that the containers in the Pods assigned to its node are running and healthy.

  - Container Runtime: The container runtime is the software that actually runs the containers, such as containerd or CRI-O. Docker Engine can still be used through the cri-dockerd adapter.

  - kube-proxy: kube-proxy is a network proxy that runs on each node and maintains the network rules that forward traffic sent to a Service to the appropriate Pods.

- Add-ons: Kubernetes supports several optional add-ons that provide additional functionality, such as:

  - DNS: The DNS add-on (commonly CoreDNS) gives Services DNS names, providing DNS-based service discovery within the cluster.

  - Dashboard: The Dashboard add-on provides a web-based user interface for managing the cluster.

  - Ingress Controller: An Ingress controller routes external HTTP and HTTPS traffic to Services in the cluster according to Ingress rules.

Overall, the Kubernetes architecture is designed to be scalable, resilient, and flexible, allowing it to manage containerized workloads across different environments and infrastructures.
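
The Scheduler's decision can be pictured as the two phases that the Kubernetes scheduler documentation describes: filtering out nodes that cannot run a Pod, then scoring the remaining ones. The Python sketch below is a heavily simplified model under stated assumptions: it only considers CPU and memory requests, its scoring rule is modeled loosely on the "least allocated" idea of preferring emptier nodes, and `Node`, `PodRequest`, and `schedule` are hypothetical names, not scheduler APIs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PodRequest:
    name: str
    cpu: int  # requested CPU in millicores
    mem: int  # requested memory in MiB


@dataclass
class Node:
    name: str
    cpu_allocatable: int  # millicores available to Pods
    mem_allocatable: int  # MiB available to Pods
    cpu_requested: int = 0
    mem_requested: int = 0

    def fits(self, pod: PodRequest) -> bool:
        """Filter phase: can this node hold the Pod's requests?"""
        return (self.cpu_requested + pod.cpu <= self.cpu_allocatable
                and self.mem_requested + pod.mem <= self.mem_allocatable)

    def score(self, pod: PodRequest) -> float:
        """Score phase: average fraction of CPU and memory left free after placement."""
        cpu_left = (self.cpu_allocatable - self.cpu_requested - pod.cpu) / self.cpu_allocatable
        mem_left = (self.mem_allocatable - self.mem_requested - pod.mem) / self.mem_allocatable
        return (cpu_left + mem_left) / 2


def schedule(pod: PodRequest, nodes: list) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits (the Pod would stay Pending)."""
    feasible = [n for n in nodes if n.fits(pod)]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: n.score(pod))  # ties go to the first node listed
    # Bind: record the requests so later Pods see the reduced capacity.
    best.cpu_requested += pod.cpu
    best.mem_requested += pod.mem
    return best.name


nodes = [Node("node-a", 2000, 4096), Node("node-b", 4000, 8192)]
print(schedule(PodRequest("web-1", 500, 512), nodes))  # node-b
```

The real scheduler runs many more plugins (affinity and anti-affinity, taints and tolerations, topology spread, and others) and breaks ties randomly rather than by list order.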

What language is Kubernetes written in?

Kubernetes is primarily written in the Go programming language, also known as Golang. However, its functionality is exposed through a REST API over HTTP, so you can interact with the platform from many programming languages, including Java, Python, Ruby, and others. Many client libraries and SDKs are also available in different languages, which makes it easier to work with Kubernetes programmatically.
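
Because the API is plain HTTP with JSON payloads, even Python's standard library can query it. The sketch below assumes that `kubectl proxy` is running locally on its default port, 8001, so that the proxy handles authentication; the helper names `pods_url`, `summarize_pods`, and `list_pods` are hypothetical. For real programs, an official client library such as the Python `kubernetes` package is the more robust choice.

```python
import json
import urllib.request

# Assumption: `kubectl proxy` is running and listening on its default port.
API_BASE = "http://127.0.0.1:8001"


def pods_url(namespace: str = "default") -> str:
    """URL of the core/v1 endpoint that lists the Pods in one namespace."""
    return f"{API_BASE}/api/v1/namespaces/{namespace}/pods"


def summarize_pods(pod_list: dict) -> dict:
    """Map each Pod name in a PodList response to its phase (Pending, Running, ...)."""
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "Unknown")
        for item in pod_list.get("items", [])
    }


def list_pods(namespace: str = "default") -> dict:
    """Fetch and summarize the Pods in `namespace`; requires a reachable cluster."""
    with urllib.request.urlopen(pods_url(namespace)) as resp:
        return summarize_pods(json.load(resp))
```

With the proxy running, calling `list_pods()` returns a dictionary that maps each Pod name in the default namespace to its current phase.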

How does Kubernetes work?

Kubernetes works by managing containers, which are lightweight, portable units of software that run applications and their dependencies consistently across different environments. Here is a simplified overview of how Kubernetes works:

- Define the desired state: You define the desired state of your application using a Kubernetes manifest, which is a YAML or JSON file that describes the containers, their configuration, and how they interact with each other.

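- Create the resources: You submit the manifest to the Kubernetes API (typically with kubectl apply), which creates the resources it describes, such as Deployments and Services. Controllers then create any dependent resources; for example, a Deployment creates a ReplicaSet, which in turn creates the Pods.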

- Schedule the containers: The scheduler assigns each Pod (a group of one or more containers) to an available node in the cluster, taking into account factors such as resource requests, affinity, and anti-affinity rules.

- Monitor the containers: Kubernetes continuously monitors the health of the containers and their underlying nodes. If a container fails, Kubernetes restarts it; if a node fails, the Pods that ran on it are replaced on healthy nodes.

- Scale the containers: Kubernetes can scale the number of Pod replicas up or down, either manually or automatically based on demand. The Horizontal Pod Autoscaler, for example, adjusts the replica count based on metrics such as CPU utilization.

- Manage the networking: Kubernetes manages the networking between containers and services, providing load balancing, service discovery, and routing.

- Manage the storage: Kubernetes manages the storage for containers, providing options for persistent storage and dynamic provisioning.
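
The first two steps can be made concrete with a minimal Deployment manifest. Since Kubernetes accepts JSON as well as YAML manifests, the Python sketch below builds one as a dictionary and prints it as JSON. The function name `deployment_manifest`, the name `web`, the `nginx:1.25` image, and the CPU and memory request values are illustrative assumptions. Saving the output to a file and running `kubectl apply -f` on it would create the Deployment, whose controller then creates a ReplicaSet and the Pods that the scheduler places on nodes.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment that describes the desired state."""
    labels = {"app": name}  # links the Deployment's selector to its Pod template
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                 # desired number of Pods
            "selector": {"matchLabels": labels},  # which Pods this Deployment manages
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Illustrative requests; the scheduler reserves this much capacity.
                        "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```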

What are the Pros and Cons of Kubernetes?

Kubernetes is a powerful container orchestration platform that offers many benefits, but it also has some limitations and drawbacks. Here are some of the pros and cons of using Kubernetes:

Pros:

- Scalability: Kubernetes can scale applications horizontally and vertically, allowing you to handle increased traffic and workload demands.

- High availability: Kubernetes can detect and automatically recover from container and node failures, which helps keep your applications available.

- Portability: Kubernetes allows you to run containerized applications across different cloud providers and on-premises infrastructure, making it easier to move workloads between environments.

- Flexibility: Kubernetes provides a wide range of configuration options and extensions, allowing you to customize the platform to meet your specific needs.

- Resource optimization: Kubernetes packs workloads onto nodes according to their resource requests, and with autoscaling it can add capacity as demand grows and scale back down when demand falls.

- Community support: Kubernetes is an open-source project with a large and active community, providing a wealth of resources, tools, and best practices.
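
The horizontal scaling behind the Scalability and Resource optimization points is typically handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The Python function below sketches that rule. The `min_replicas` and `max_replicas` defaults are hypothetical, and the real controller also applies stabilization windows and handles missing metrics and Pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```

Scaling down works the same way: 5 replicas averaging 20% CPU against a 60% target give ceil(5 × 1/3) = 2.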

Cons:

- Complexity: Kubernetes is a complex platform that requires a significant amount of expertise to deploy and manage, making it challenging for small teams and startups.

- Learning curve: The learning curve for Kubernetes can be steep, especially for developers who are not familiar with containers, microservices, and distributed systems.

- Overhead: Kubernetes adds a layer of abstraction, and its own components consume cluster resources, which can affect performance and increase operational costs.

- Maintenance: Kubernetes ships new minor releases several times a year and supports each one for a limited period, so clusters need regular upgrades, which can be time-consuming and expensive.

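- Vendor lock-in: Although Kubernetes itself is portable, managed Kubernetes services often rely on provider-specific features (such as load balancers, storage, and identity management), which can make it difficult to switch to a different provider or move workloads to an on-premises environment.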

- Networking complexity: The Kubernetes networking model (Pod networking, Services, Ingress, and network policies) requires careful planning and management to avoid network issues.